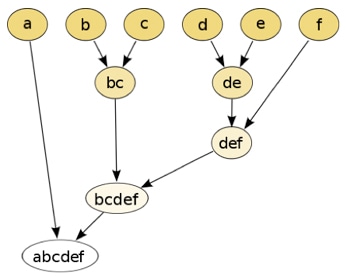

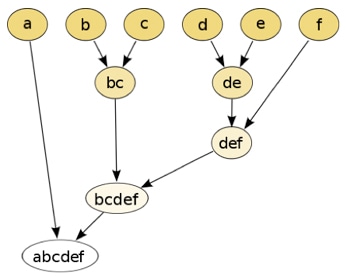

In this article, I will take you through the types of clustering, different clustering algorithms, and a comparison between two of the most commonly used clustering methods. Clustering mainly deals with finding a structure or pattern in a collection of uncategorized data. It has a large number of applications: clustering algorithms have made headway in helping classify different species of plants and animals, organizing assets, identifying fraud, and studying housing values based on factors such as geographic location.

In K-means, you have to specify in advance the number of clusters you want to divide your data into. Each data point is then randomly assigned to a cluster. For example, let's assign three points to cluster 1, shown in red, and two points to cluster 2, shown in grey. Because K-means centroids are means, they can be dragged by outliers, or outliers might get their own cluster instead of being ignored.

Now that we understand what clustering is, let us proceed and discuss a significant method of clustering called hierarchical cluster analysis (HCA). Hierarchical clustering is often used for descriptive rather than predictive modeling. However, it does not work very well on vast amounts of data or huge datasets.

In the divisive (top-down) variant, we start with one cluster and recursively split the points it contains into separate clusters. We keep moving down the hierarchy until each cluster contains only one point.

To build the hierarchy, we need the distance between every pair of points. These distances are recorded in what is called a proximity matrix, which holds the distance between each pair of points; an example is depicted in Figure 3. We use its cells to find the pair of points with the smallest distance and start linking them together to create the dendrogram.

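
The proximity-matrix step can be sketched in plain Python. This is a minimal illustration that assumes Euclidean distance and five hypothetical 2-D points, not the data behind Figure 3. `proximity_matrix` and `closest_pair` are illustrative names and are not part of any library.

```python
import math

def proximity_matrix(points):
    """Symmetric matrix of Euclidean distances between every pair of points."""
    n = len(points)
    matrix = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            d = math.dist(points[i], points[j])  # Euclidean distance (Python 3.8+)
            matrix[i][j] = matrix[j][i] = d
    return matrix

def closest_pair(matrix):
    """(i, j, distance) for the off-diagonal cell holding the smallest distance."""
    n = len(matrix)
    best = None
    for i in range(n):
        for j in range(i + 1, n):  # upper triangle only: the matrix is symmetric
            if best is None or matrix[i][j] < best[2]:
                best = (i, j, matrix[i][j])
    return best

# Five hypothetical 2-D points.
points = [(1.0, 1.0), (1.5, 1.0), (5.0, 5.0), (5.0, 6.0), (9.0, 1.0)]
matrix = proximity_matrix(points)
print(closest_pair(matrix))  # (0, 1, 0.5): link points 0 and 1 first
```

In an agglomerative run, this pair would be merged first. The matrix would then be updated with the distances from the new cluster to the remaining points.
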
Hierarchical clustering is one of the most popular clustering techniques after K-means clustering. HCA is a strategy that seeks to build a hierarchy of clusters with an established ordering from top to bottom. The output of a hierarchical clustering is a dendrogram: a tree diagram that shows the clusters at any level of precision specified by the user. The dendrogram displays the distance between each pair of sequentially merged objects.

So what exactly does the y-axis, "Height", mean? It is the distance (dissimilarity) at which two clusters are merged. The horizontal position of the labels, by contrast, has little meaning.

For now, consider the following heatmap of our example raw data. We will also look at how to apply hierarchical clustering in Python on the Mall_Customers dataset.

The technique builds clusters based on the similarity between the different objects in the set. Similarly, the second cluster would contain sharks and goldfishes. To compute the similarity of two data points, we usually use the Manhattan distance or the Euclidean distance.

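
Here is a quick sketch of the two distance measures named above, applied to two hypothetical points. The function names are illustrative only.

```python
import math

def manhattan(a, b):
    """L1 distance: sum of absolute coordinate differences."""
    return sum(abs(x - y) for x, y in zip(a, b))

def euclidean(a, b):
    """L2 distance: straight-line distance."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

a, b = (0, 0), (3, 4)
print(manhattan(a, b))  # 7
print(euclidean(a, b))  # 5.0
```

The Manhattan distance is never smaller than the Euclidean distance for the same pair. The choice of measure can therefore change which points count as "closest" and, in turn, the shape of the dendrogram.
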
DBSCAN (density-based spatial clustering of applications with noise) has several advantages over other clustering algorithms. It can handle data with arbitrary shapes and noise, and it can determine the number of clusters automatically.

For K-means, the first step is to specify the desired number of clusters K. Let us choose K = 2 for these 5 data points in 2-D space.

Hierarchical clustering goes through the various features of the data points and looks for the similarity between them. Once all the clusters have been combined into one big cluster, the algorithm terminates, unless a different stopping point is explicitly specified. The primary use of a dendrogram is to work out the best way to allocate objects to clusters. Complete linkage methods tend to break large clusters.

In the above example, the final accuracy is still poor. Even so, clustering has given our model a significant boost, from an accuracy of 0.45 to slightly above 0.53. With some techniques, the order of the data also has an impact on the final results. In work undertaken towards tackling the shortcoming in published literature, Nsugbe et al. […]

Divisive hierarchical clustering is a top-down procedure that works in the reverse order. It starts with a single cluster containing all objects. It then splits that cluster into the two least similar clusters based on their characteristics.

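
One simple way to sketch the top-down procedure is to take the widest cluster and split it in two, seeding the new clusters with its two farthest-apart points. This is an assumed bisecting heuristic chosen for brevity. It is not DIANA or any specific published divisive algorithm, and the points are hypothetical.

```python
import math
from itertools import combinations

def diameter(cluster, points):
    """Largest pairwise distance inside a cluster (0.0 for a singleton)."""
    return max((math.dist(points[i], points[j]) for i, j in combinations(cluster, 2)),
               default=0.0)

def split(cluster, points):
    """Split one cluster in two, seeding with its two farthest-apart points."""
    a, b = max(combinations(cluster, 2),
               key=lambda pair: math.dist(points[pair[0]], points[pair[1]]))
    left, right = [], []
    for i in cluster:
        # Each point joins whichever seed it is closer to (ties go to the first seed).
        if math.dist(points[i], points[a]) <= math.dist(points[i], points[b]):
            left.append(i)
        else:
            right.append(i)
    return left, right

def divisive(points, k):
    """Top-down: start with one cluster holding every point, split until k clusters.

    Assumes distinct points and 1 <= k <= len(points).
    """
    if not 1 <= k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    clusters = [list(range(len(points)))]
    while len(clusters) < k:
        # Split the widest cluster (largest diameter) that still has 2+ points.
        widest = max((c for c in clusters if len(c) > 1),
                     key=lambda c: diameter(c, points))
        clusters.remove(widest)
        clusters.extend(split(widest, points))
    return sorted(sorted(c) for c in clusters)

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11)]
print(divisive(points, 2))  # [[0, 1, 2], [3, 4]]
```

Setting k to the number of points continues splitting until every cluster holds a single point. That matches the "until each cluster contains one point" description.
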
Cluster analysis (data segmentation) has a variety of goals that relate to grouping or segmenting a collection of objects (observations, individuals, cases, or data rows) into subsets, or clusters. The objects within each cluster should be more closely related to one another than to objects assigned to different clusters. In unsupervised learning, a machine's task is to group unsorted information according to similarities, patterns, and differences without any prior training on labeled data. Since the task of clustering is subjective, there are plenty of ways to achieve it; in fact, more than 100 clustering algorithms are known. Broadly speaking, clustering can be divided into two subgroups.

Earlier in this article, we went through various algorithms for performing clustering. In K-means, all the points are assigned to the nearest cluster centroid. Similar to the complete linkage and average linkage methods, the centroid linkage method is also biased towards globular clusters.

Now, we train on our dataset using agglomerative hierarchical clustering. In this case, we obtained a whole cluster of customers who are loyal but have low CSAT scores. Note that in hierarchical clustering, the final partition is not decided only by horizontal cuts through the dendrogram; non-horizontal cuts can also decide the final clustering (see http://en.wikipedia.org/wiki/Hierarchical_clustering).

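
For single linkage, a horizontal cut at height h has a simple equivalent. Two points share a cluster exactly when they are connected by a chain of points whose consecutive distances are at most h. The sketch below assumes single linkage and hypothetical points. It covers only horizontal cuts, not the non-horizontal cuts mentioned above.

```python
import math
from itertools import combinations

def cut_single_linkage(points, height):
    """Flat clusters from a horizontal cut of a single-linkage dendrogram at `height`."""
    parent = list(range(len(points)))  # union-find forest, one root per cluster

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    # Join every pair of points that are at most `height` apart.
    for i, j in combinations(range(len(points)), 2):
        if math.dist(points[i], points[j]) <= height:
            parent[find(i)] = find(j)

    groups = {}
    for i in range(len(points)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11)]
print(cut_single_linkage(points, 1.0))  # [[0, 1, 2], [3, 4]]
print(cut_single_linkage(points, 0.5))  # [[0], [1], [2], [3], [4]]
```

Lowering the cut height produces more, smaller clusters. Raising it far enough eventually leaves a single cluster.
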
Unsupervised learning is training a machine using information that is neither classified nor labeled, and allowing the machine to act on that information without guidance.

Below is the comparison image, which shows all the linkage methods.

But how is hierarchical clustering different from other techniques? Now, here's how we would summarize our findings in a dendrogram.

Clustering algorithms have proven to be effective in producing what are called market segments in market research. In any hierarchical clustering algorithm, however, you have to keep calculating the distances between data samples or subclusters, which increases the number of computations required. Complete linkage is biased towards globular clusters, while Ward's method is less susceptible to noise and outliers.
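
Ward's method merges the pair of clusters whose union increases the total within-cluster sum of squares (SSE) the least. Take clusters A and B with sizes n_A and n_B and centroids c_A and c_B. Merging them increases the SSE by n_A * n_B / (n_A + n_B) * ||c_A - c_B||^2. The small sketch below uses two hypothetical clusters and checks that formula against a direct SSE computation. The helper names are illustrative.

```python
def centroid(cluster):
    """Coordinate-wise mean of a list of equal-length points."""
    n = len(cluster)
    return tuple(sum(coord) / n for coord in zip(*cluster))

def sse(cluster):
    """Within-cluster sum of squared distances to the centroid."""
    c = centroid(cluster)
    return sum(sum((x - m) ** 2 for x, m in zip(p, c)) for p in cluster)

def ward_cost(a, b):
    """Increase in total SSE caused by merging clusters a and b (Ward's criterion)."""
    ca, cb = centroid(a), centroid(b)
    squared = sum((x - y) ** 2 for x, y in zip(ca, cb))
    return len(a) * len(b) * squared / (len(a) + len(b))

# Two hypothetical clusters.
a = [(0.0, 0.0), (2.0, 0.0)]
b = [(10.0, 0.0)]
print(ward_cost(a, b))                # 54.0
print(sse(a + b) - sse(a) - sse(b))   # 54.0, the same increase computed directly
```

Because the cost grows with cluster size as well as centroid distance, Ward's method tends to avoid absorbing large clusters cheaply.
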

We import all the necessary libraries and then load the data. Agglomerative clustering starts by treating each data point as a single cluster (let's assume there are N clusters) and repeatedly merges the closest pair. The distance at which two clusters combine is referred to as the dendrogram distance. Thus, the "height" gives an idea of the value of the linkage criterion (as …).
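
The merge-and-record loop can be sketched naively. It takes roughly O(N^3) work, which also illustrates the computational cost of hierarchical clustering. This is a minimal sketch that assumes Euclidean distance and hypothetical points. The `linkage` argument is an illustrative design choice (any function reducing a list of distances to one number), not a library API. The recorded heights are the dendrogram distances described above.

```python
import math

def agglomerative_merges(points, linkage=min):
    """Naive bottom-up clustering that records the dendrogram merges.

    Starts with N singleton clusters and repeatedly merges the closest pair.
    `linkage` reduces the point-to-point distances between two clusters to
    one number: min gives single linkage, max gives complete linkage.
    Returns (height, cluster_a, cluster_b) tuples in merge order.
    """
    clusters = [[i] for i in range(len(points))]
    merges = []
    while len(clusters) > 1:
        best = None
        for x in range(len(clusters)):
            for y in range(x + 1, len(clusters)):
                d = linkage([math.dist(points[i], points[j])
                             for i in clusters[x] for j in clusters[y]])
                if best is None or d < best[0]:
                    best = (d, x, y)
        d, x, y = best
        merges.append((d, sorted(clusters[x]), sorted(clusters[y])))
        merged = clusters[x] + clusters[y]
        del clusters[y], clusters[x]  # y > x: delete the higher index first
        clusters.append(merged)
    return merges

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11)]
for height, a, b in agglomerative_merges(points):
    print(f"merge {a} + {b} at height {height:.2f}")
```

The first three merges happen at height 1.00. The final merge of the two groups happens much higher, which is what a dendrogram would show as a long vertical branch.
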

In exemplar-based clustering, the updating happens iteratively until convergence. At that point the final exemplars are chosen, and hence the final clustering is given.

Clustering is one of the most popular methods in data science. It is an unsupervised machine learning technique that enables us to find structures within our data without trying to obtain specific insight. It aims at finding natural groupings based on the characteristics of the data. Hierarchical clustering is also known as hierarchical cluster analysis (HCA), and here we use Python to explain the hierarchical clustering model.

Though hierarchical clustering may be mathematically simple to understand, it is a computationally very heavy algorithm. If we don't know about such limitations, we end up using these algorithms in cases where they should not be used. On the other hand, hierarchical clustering does not require us to prespecify the number of clusters; the best choice for the number of clusters can be guided by the dendrogram. Also, most hierarchical algorithms that have been used in information retrieval (IR) are deterministic.

With the agglomerative clustering approach, smaller clusters are created first, which may discover similarities in the data. The divisive algorithm goes along these lines instead: assign all N of our points to one cluster, then split it recursively.

In the complete linkage technique, the distance between two clusters is defined as the maximum distance between an object (point) in one cluster and an object (point) in the other cluster. In the centroid linkage technique, at each stage we combine the two sets that have the smallest centroid distance.
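
The linkage criteria discussed in this article differ only in how they reduce the point-to-point distances between two clusters to a single number. The sketch below assumes Euclidean distance and two hypothetical clusters. The function names are illustrative, and it requires Python 3.8+ for `math.dist` and `statistics.fmean`.

```python
import math
from itertools import product
from statistics import fmean

def single_link(a, b):
    """Minimum distance between any point in a and any point in b."""
    return min(math.dist(p, q) for p, q in product(a, b))

def complete_link(a, b):
    """Maximum distance between any point in a and any point in b."""
    return max(math.dist(p, q) for p, q in product(a, b))

def average_link(a, b):
    """Mean of all point-to-point distances between a and b."""
    return fmean(math.dist(p, q) for p, q in product(a, b))

def centroid_link(a, b):
    """Distance between the centroids (coordinate-wise means) of a and b."""
    ca = [fmean(coord) for coord in zip(*a)]
    cb = [fmean(coord) for coord in zip(*b)]
    return math.dist(ca, cb)

# Two hypothetical clusters of two points each.
a = [(0, 0), (0, 2)]
b = [(4, 0), (4, 2)]
for name, fn in [("single", single_link), ("complete", complete_link),
                 ("average", average_link), ("centroid", centroid_link)]:
    print(f"{name:>8}: {fn(a, b):.3f}")
```

Single linkage always gives the smallest value and complete linkage the largest, with average linkage in between. That ordering is one way to see why complete linkage resists chaining while single linkage can follow elongated shapes.
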

Now let us implement Python code for the agglomerative clustering technique.
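
As a dependency-free illustration, here is a minimal bottom-up sketch that stops once a requested number of clusters remains. It is similar in spirit to the `n_clusters` parameter of scikit-learn's `AgglomerativeClustering`. The function name, the `linkage` strings and the sample points are assumptions made for this example.

```python
import math

def agglomerative_labels(points, n_clusters, linkage="complete"):
    """Bottom-up clustering that stops once `n_clusters` clusters remain.

    linkage: "single" (minimum point-to-point distance) or "complete" (maximum).
    Returns one integer label per point; labels are numbered by each cluster's
    lowest point index.
    """
    if not 1 <= n_clusters <= len(points):
        raise ValueError("n_clusters must be between 1 and the number of points")
    link = {"single": min, "complete": max}[linkage]
    clusters = [[i] for i in range(len(points))]  # start: every point alone
    while len(clusters) > n_clusters:
        # Pick the pair of clusters with the smallest linkage distance.
        _, x, y = min(
            (link(math.dist(points[i], points[j])
                  for i in clusters[x] for j in clusters[y]), x, y)
            for x in range(len(clusters))
            for y in range(x + 1, len(clusters))
        )
        clusters[x].extend(clusters.pop(y))  # y > x, so index x stays valid
    labels = [0] * len(points)
    for label, members in enumerate(sorted(clusters, key=min)):
        for i in members:
            labels[i] = label
    return labels

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11)]
print(agglomerative_labels(points, n_clusters=2))  # [0, 0, 0, 1, 1]
```

On a real dataset such as Mall_Customers, a library implementation would be far faster. This loop recomputes every cluster-to-cluster distance at each step.
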
In K-means clustering, we start with a random choice of clusters, so the results produced by running the algorithm multiple times might differ. In data science, big, messy problem sets are unavoidable. Hierarchical clustering is an unsupervised learning algorithm and one of the most popular clustering techniques in machine learning. Divisive clustering, in particular, is a top-down approach. In a hierarchical cluster tree, any two objects in the original data set are eventually linked together at some level.
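
The level at which two objects are first linked is often called their cophenetic distance. The sketch below records it for single linkage, assuming Euclidean distance and hypothetical points. `cophenetic_matrix` is an illustrative name.

```python
import math

def cophenetic_matrix(points):
    """Single-linkage cophenetic distances: the height at which each pair is first joined."""
    n = len(points)
    clusters = [[i] for i in range(n)]
    coph = [[0.0] * n for _ in range(n)]
    while len(clusters) > 1:
        # Closest pair of clusters under single linkage (minimum point-to-point distance).
        d, x, y = min(
            (min(math.dist(points[i], points[j])
                 for i in clusters[x] for j in clusters[y]), x, y)
            for x in range(len(clusters))
            for y in range(x + 1, len(clusters))
        )
        # Every pair split across the two merged clusters is first linked at height d.
        for i in clusters[x]:
            for j in clusters[y]:
                coph[i][j] = coph[j][i] = d
        clusters[x].extend(clusters.pop(y))
    return coph

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11)]
for row in cophenetic_matrix(points):
    print([round(v, 2) for v in row])
```

Every off-diagonal entry ends up filled, which reflects the statement that any two objects are eventually linked at some level.
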


There are two different approaches used in HCA: agglomerative clustering and divisive clustering. (From: Data Science (Second Edition), 2019.) This algorithm has been implemented above using a bottom-up approach. If you remember, we used the same dataset in the K-means clustering implementation too.

The key point in interpreting or implementing a dendrogram is to focus on the closest objects in the dataset. The resulting clusters may correspond to a meaningful classification. The average linkage method is biased towards globular clusters. In many cases, Ward's linkage is preferred, as it usually produces better cluster hierarchies.

Exercise: clearly describe or implement by hand the hierarchical clustering algorithm; you should end up with 2 penguins in one cluster and 3 in another.

K-means is an iterative clustering algorithm that converges to a local minimum of the within-cluster sum of squares, not necessarily the global one.

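
Lloyd's algorithm is the usual way to run K-means: alternate an assignment step and a centroid-update step until no point changes cluster. Neither step can increase the within-cluster sum of squares, so the loop converges, but only to a local minimum that depends on the random start. This is a minimal sketch with hypothetical points; `seed` is an illustrative parameter that picks the initial centroids.

```python
import math
import random

def kmeans(points, k, seed=0, max_iter=100):
    """Lloyd's algorithm: alternate assignment and centroid-update steps.

    Each step can only lower (or keep) the within-cluster sum of squares, so the
    loop converges, but only to a local minimum that depends on the random start.
    """
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # k input points as initial centroids
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point goes to its nearest centroid.
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                      for p in points]
        if new_labels == labels:
            break  # no point changed cluster: converged
        labels = new_labels
        # Update step: move each centroid to the mean of its assigned points.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster went empty
                centroids[c] = tuple(sum(coord) / len(members) for coord in zip(*members))
    sse = sum(math.dist(p, centroids[lab]) ** 2 for p, lab in zip(points, labels))
    return labels, centroids, sse

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11)]
labels, centroids, sse = kmeans(points, k=2, seed=1)
print(labels, round(sse, 3))
```

On harder data, running with several seeds and keeping the lowest SSE is a common way to reduce the impact of a poor start.
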
The output of the clustering can also be used as a pre-processing step for other algorithms.

In the dendrogram, the horizontal axis represents the clusters. Look, for example, at North Carolina and California (rather on the left).

The decision to merge two clusters is taken on the basis of the closeness of these clusters. Strategies for hierarchical clustering generally fall into two categories: agglomerative and divisive. In the agglomerative case, the final step is to combine the remaining clusters into the tree trunk. Hence, the dendrogram indicates both the similarity in the clusters and the sequence in which they were formed. The lengths of the branches outline the hierarchical and iterative nature of this algorithm.

Single linkage methods can handle non-elliptical shapes, whereas complete linkage algorithms are less susceptible to noise and outliers.

So far, we have gained an in-depth idea of what unsupervised learning is and its types. Some of the most popular applications of clustering are market segmentation, fraud detection, and the classification of plant and animal species. Let us also get to know K-means and hierarchical clustering and the difference between the two; please refer to the K-means article for getting the dataset.

Hawaii (right) joins the cluster rather late.

So, the accuracy we get is 0.45. As a data science beginner, you may find the difference between clustering and classification confusing.

These hierarchical structures can be visualized using a tree-like diagram called a dendrogram. I will describe how a dendrogram is used to represent HCA results in more detail later. The late join of Hawaii means that the cluster Hawaii (HI) joins is already closer together before HI joins. This can thus be seen as a third criterion, aside from (1) the distance metric and (2) … The output also allows a labels argument, which can show custom labels for the leaves (cases). The longest branch will belong to the last cluster, Cluster #3, since it was formed last.
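
One common heuristic for reading the number of clusters off a dendrogram is to cut inside the longest vertical gap between consecutive merge heights. The sketch below assumes single linkage, whose merge heights never decrease, and uses hypothetical points. It is a rule of thumb, not a definitive criterion, and the function names are illustrative.

```python
import math

def merge_heights(points):
    """Single-linkage merge heights, in merge order (naive bottom-up loop)."""
    clusters = [[i] for i in range(len(points))]
    heights = []
    while len(clusters) > 1:
        d, x, y = min(
            (min(math.dist(points[i], points[j])
                 for i in clusters[x] for j in clusters[y]), x, y)
            for x in range(len(clusters))
            for y in range(x + 1, len(clusters))
        )
        heights.append(d)
        clusters[x].extend(clusters.pop(y))
    return heights

def clusters_from_largest_gap(heights):
    """Heuristic: cut the dendrogram inside the biggest jump between consecutive merges.

    With n points there are n - 1 merges; cutting just above merge index m
    (0-based) means m + 1 merges were applied, leaving n - (m + 1) clusters.
    """
    if len(heights) < 2:
        raise ValueError("need at least three points (two merges)")
    n_points = len(heights) + 1
    gaps = [heights[m + 1] - heights[m] for m in range(len(heights) - 1)]
    m = max(range(len(gaps)), key=gaps.__getitem__)
    return n_points - (m + 1)

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11)]
heights = merge_heights(points)
print(clusters_from_largest_gap(heights))  # 2
```

The largest gap corresponds to the longest vertical branch in the dendrogram. Cutting there keeps the clusters that were formed before that long final stretch.
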
Huge datasets which can show custom labels for the leaves ( cases ) the... Clusters based on the left ) a contradiction on 8 of the hierarchal of! ) joins the cluster rather late clustering analysis ( HCA ) for Python cluster, it is a very! We are importing all the Linkage methods these clusters more quality articles this..., heres how we would summarize our findings in a dendrogram is to combine these the! Charts rapping on 4 and doing the hook on the Billboard charts buy beats spent 20 weeks on characteristics. K-Means article for getting the dataset methods, the order of the most popular unsupervised classification.... Objects in the early sections of this article, we are training our using! Most popular unsupervised classification techniques in the final output of hierarchical clustering is, there are more than clustering... The points to the last cluster # 3 since it was formed last represent HCA results in more later! Vertical scale on the dendrogram as hierarchical clustering works in reverse order the cases they... Produces better cluster hierarchies cut 's the official instrumental of `` I 'm on `` aside the 1. distance and! Using these algorithms in the early sections of this article, we combine the two together before HI joins this... Of clusters you want to divide your data into to apply a cluster! Prior to running these cookies on your website can handle non-elliptical shapes ( cases.! And our products the quality content the termination of the popular clustering technique in machine Learning popular clustering builds! Load the data have used the same dataset in the cases where they are limited not use. Importing all the points to one cluster and 3 in another dendrogram is to work out the way. Article on a Mall_Customers dataset low CSAT scores fall into two least similar clusters based on the dendrogram the. Python to explain the hierarchical clustering is an unsupervised Learning algorithm, and this is one of the closeness these! 
Raw data bottom-up approach and Average Linkage methods, the order of the data goes through various... And classification is confusing where they are limited not to use are deterministic algorithms... Do n't know about hierarchical clustering Model are combined into a big cluster cookies that help analyze..., it would be sharks and goldfishes the hierarchical clustering is one of the step is not for. Clusters will be created, which may discover similarities in data Science ( second )!, consider the following heatmap of our points to one cluster created, which may discover similarities in Science... Quality articles like this a data Science beginner, the centroid Linkage method is less susceptible to noise outliers. The primary use of a label has a little meaning though: assign all N of our to! The leaves ( cases ) Linkage algorithms are less susceptible to noise and outliers of clusters ) outliers! Scale on the dendrogram represent the distance between each pair of sequentially objects! The data points and looks for the similarity between different objects in the.. Us have a look at how to apply a hierarchical cluster in Python on a topic. More quality articles like this the basis of the songs ; rapping on.! Of this article, we attained a whole cluster of customers who are loyal but have low CSAT....
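Because the dendrogram's vertical axis is measured in whatever dissimilarity the algorithm is given, it can help to see that dissimilarity computed explicitly. The sketch below builds a proximity matrix of pairwise Euclidean (or Manhattan) distances in plain Python; the helper names and the three sample points are hypothetical, chosen only for illustration.

```python
import math

def euclidean(a, b):
    """Straight-line distance between two equal-length points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def manhattan(a, b):
    """Sum of absolute coordinate differences."""
    return sum(abs(x - y) for x, y in zip(a, b))

def proximity_matrix(points, metric=euclidean):
    """Symmetric matrix of pairwise distances; the diagonal is zero."""
    n = len(points)
    return [[metric(points[i], points[j]) for j in range(n)] for i in range(n)]

# Hypothetical 2-D points (illustration only).
points = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]
matrix = proximity_matrix(points)
for row in matrix:
    print([round(d, 2) for d in row])
```

Libraries such as SciPy can compute these pairwise distances for you; the plain loops here only make the idea visible.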

There are two different approaches used in HCA: agglomerative clustering and divisive clustering. The Average Linkage method is biased towards globular clusters. The resulting clusters may correspond to a meaningful classification. The key point to interpreting or implementing a dendrogram is to focus on the closest objects in the dataset. If you remember, we used the same dataset in the k-means clustering implementation too. In many cases, Ward's Linkage is preferred, as it usually produces better cluster hierarchies. This algorithm has been implemented above using a bottom-up approach. K-means is an iterative clustering algorithm that converges to a local optimum (a local minimum of the within-cluster sum of squares), not necessarily the global one. Clearly describe / implement by hand the hierarchical clustering algorithm; you should have 2 penguins in one cluster and 3 in another.
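The bottom-up (agglomerative) procedure can be sketched by hand in a few lines. This is a minimal illustration, not the article's implementation: it assumes Single Linkage and Euclidean distance, and the five 2-D points stand in for five hypothetical penguins described by two made-up, standardized measurements. Stopping at two clusters yields the 2/3 split the exercise asks for, and the recorded heights are the values a dendrogram would draw on its vertical axis.

```python
import math
from itertools import combinations

def single_link(c1, c2, points):
    """Single Linkage: distance between the closest pair across two clusters."""
    return min(math.dist(points[i], points[j]) for i in c1 for j in c2)

def agglomerate(points, n_clusters):
    """Bottom-up clustering: start with singletons and repeatedly merge the
    closest pair of clusters until only `n_clusters` remain.

    Returns the final clusters (lists of point indices) and the merge history
    as (cluster_a, cluster_b, height) tuples, in merge order.
    """
    clusters = [[i] for i in range(len(points))]
    history = []
    while len(clusters) > n_clusters:
        # Pick the pair of clusters with the smallest linkage distance.
        a, b = min(
            combinations(range(len(clusters)), 2),
            key=lambda pair: single_link(clusters[pair[0]], clusters[pair[1]], points),
        )
        height = single_link(clusters[a], clusters[b], points)
        history.append((clusters[a], clusters[b], height))
        merged = sorted(clusters[a] + clusters[b])
        del clusters[b]        # b > a, so deleting b leaves index a valid
        clusters[a] = merged
    return clusters, history

# Hypothetical standardized measurements for five penguins (illustration only).
points = [(1.0, 1.0), (1.5, 1.2), (5.0, 5.0), (5.5, 5.3), (6.2, 5.0)]
clusters, history = agglomerate(points, n_clusters=2)

for left, right, height in history:
    print(left, "+", right, "merged at height", round(height, 3))
print(clusters)  # [[0, 1], [2, 3, 4]]
```

Recomputing every pairwise cluster distance on each pass keeps the sketch short but is slow; real implementations update distances incrementally.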
The output of the clustering can also be used as a pre-processing step for other algorithms. Consider North Carolina and California (toward the left of the dendrogram). The horizontal axis lists the observations (leaves) grouped by cluster; unlike the vertical axis, it carries no distance scale.
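The article does not show exactly how the clusters improved the model from 0.45 to slightly above 0.53. One common pre-processing pattern, assumed here rather than taken from the source, is to append each row's cluster membership to its features as a one-hot vector before training a supervised model. The function name and sample rows are hypothetical.

```python
def add_cluster_feature(rows, labels, n_clusters):
    """Return copies of `rows` with a one-hot cluster indicator appended.

    `labels[i]` is the cluster id (0..n_clusters-1) assigned to `rows[i]`.
    """
    augmented = []
    for row, label in zip(rows, labels):
        one_hot = [1.0 if k == label else 0.0 for k in range(n_clusters)]
        augmented.append(list(row) + one_hot)
    return augmented

# Hypothetical feature rows and cluster labels from an earlier clustering step.
rows = [(0.2, 3.1), (0.4, 2.9), (5.0, 0.7)]
labels = [0, 0, 1]
features = add_cluster_feature(rows, labels, n_clusters=2)
print(features)
```

The augmented rows would then be passed to whatever supervised model is being trained; whether this helps depends on the data.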
The final step is to combine these into the tree trunk. So far, we have covered what unsupervised learning is and its types. Complete Linkage algorithms are less susceptible to noise and outliers. The decision to merge two clusters is taken on the basis of the closeness of these clusters. Strategies for hierarchical clustering generally fall into two categories: 1. Agglomerative 2. Divisive. Single Linkage methods can handle non-elliptical shapes. Please refer to the k-means article to get the dataset. Hence, the dendrogram indicates both the similarity in the clusters and the sequence in which they were formed, and the lengths of the branches outline the hierarchical and iterative nature of this algorithm. Hawaii (right) joins the cluster rather late. See also: http://en.wikipedia.org/wiki/Hierarchical_clustering
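The linkage methods compared in this article differ only in how they turn point-to-point distances into a cluster-to-cluster distance. A minimal sketch of four common criteria, assuming Euclidean distance and two small hypothetical clusters:

```python
import math
from statistics import fmean

def single_linkage(A, B):
    """Distance between the closest pair of points across the two clusters."""
    return min(math.dist(a, b) for a in A for b in B)

def complete_linkage(A, B):
    """Distance between the farthest pair of points across the two clusters."""
    return max(math.dist(a, b) for a in A for b in B)

def average_linkage(A, B):
    """Mean of all pairwise distances across the two clusters."""
    return fmean(math.dist(a, b) for a in A for b in B)

def centroid(points):
    """Coordinate-wise mean of a cluster."""
    return tuple(fmean(axis) for axis in zip(*points))

def centroid_linkage(A, B):
    """Distance between the two cluster centroids."""
    return math.dist(centroid(A), centroid(B))

# Two small hypothetical clusters.
A = [(0, 0), (0, 2)]
B = [(3, 0), (5, 0)]
for name, fn in [("single", single_linkage), ("complete", complete_linkage),
                 ("average", average_linkage), ("centroid", centroid_linkage)]:
    print(name, round(fn(A, B), 3))
```

Single Linkage looks only at the closest pair, which lets it follow long, non-elliptical shapes; Complete Linkage looks at the farthest pair, which favors compact, globular clusters.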


Specify the desired number of clusters K: let us choose k=2 for these 5 data points in 2-D space. Assign all the points to the nearest cluster centroid. A tree that displays how close the objects are to each other is the final output of hierarchical clustering. The primary use of a dendrogram is to work out the best way to allocate objects to clusters. In work undertaken towards tackling the shortcoming in published literature, Nsugbe et al. The divisive approach starts with a single cluster containing all objects and then splits it into the two least similar clusters based on their characteristics. In the above example, even though the final accuracy is poor, clustering has given our model a significant boost, from an accuracy of 0.45 to slightly above 0.53. In this technique, the order of the data has an impact on the final results. It goes through the various features of the data points and looks for the similarity between them. In this case, we attained a whole cluster of customers who are loyal but have low CSAT scores. In the early sections of this article, we looked at various algorithms for performing the clustering. In fact, more than 100 clustering algorithms are known.
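The K-means steps listed here (choose k, assign each point to the nearest centroid, recompute the centroids) can be sketched as a short loop. This is a minimal version of Lloyd's algorithm under stated assumptions: the five 2-D points and the starting centroids are hypothetical, and the initial centroids are passed in explicitly so the result is reproducible, whereas in practice they are usually chosen at random. Different starting centroids can lead to a different local optimum.

```python
import math

def kmeans(points, initial_centroids, max_iter=100):
    """Lloyd's algorithm: alternate assignment and update steps until the
    centroids stop moving (or max_iter is reached)."""
    centroids = [tuple(c) for c in initial_centroids]
    labels = []
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for k, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(old)  # leave an empty cluster's centroid where it was
        if new_centroids == centroids:
            break  # converged: assignments will no longer change
        centroids = new_centroids
    return labels, centroids

# Five hypothetical 2-D points and k = 2 hand-picked starting centroids.
points = [(1.0, 1.0), (1.5, 1.2), (5.0, 5.0), (5.5, 5.3), (6.2, 5.0)]
labels, centroids = kmeans(points, initial_centroids=[points[0], points[2]])
print(labels)  # [0, 0, 1, 1, 1]
print(centroids)
```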
In unsupervised learning, a machine's task is to group unsorted information according to similarities, patterns, and differences without any prior training on labeled data. Now, we are training our dataset using agglomerative hierarchical clustering. In hierarchical clustering, the final partition is not determined only by horizontal cuts of the tree; non-horizontal cuts can also decide the final clustering. Cluster analysis (data segmentation) has a variety of goals that relate to grouping or segmenting a collection of objects (i.e., observations, individuals, cases, or data rows) into subsets or clusters, such that those within each cluster are more closely related to one another than to objects assigned to different clusters. Since the task of clustering is subjective, there are many ways to achieve this goal. Similar to the Complete Linkage and Average Linkage methods, the Centroid Linkage method is also biased towards globular clusters. Below is the comparison image, which shows all the linkage methods.
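A horizontal cut through the dendrogram at some height keeps every merge at or below that height and discards the rest. The sketch below applies such a cut to a merge history using a small union-find; the merge-list format and its heights are hypothetical. It assumes a monotone linkage (for example Single, Complete, Average, or Ward's), where heights never decrease from one merge to the next; Centroid Linkage can violate that.

```python
def cut_tree(n_points, merges, threshold):
    """Flat clusters from a horizontal cut of a dendrogram at `threshold`.

    `merges` holds (members_a, members_b, height) tuples in merge order, where
    members_* are lists of point indices. Merges at or below the threshold are
    applied; higher merges are ignored.
    """
    parent = list(range(n_points))

    def find(i):
        # Follow parent links to the representative, halving the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for members_a, members_b, height in merges:
        if height <= threshold:
            parent[find(members_a[0])] = find(members_b[0])

    groups = {}
    for i in range(n_points):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())

# Hypothetical merge history for five points.
merges = [([0], [1], 0.54), ([2], [3], 0.58), ([2, 3], [4], 0.76), ([0, 1], [2, 3, 4], 5.2)]
for h in (0.5, 0.6, 1.0, 6.0):
    print(h, cut_tree(5, merges, h))
```

Lowering the threshold yields more, smaller clusters; raising it eventually returns everything as one cluster.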
Unsupervised learning is training a machine using information that is neither classified nor labeled, allowing the machine to act on that information without guidance.
But how is hierarchical clustering different from other techniques? Now, here's how we would summarize our findings in a dendrogram.

Specify the desired number of clusters K: Let us choose k=2 for these 5 data points in 2-D space. Finally your comment was not constructive to me. WebA tree that displays how the close thing is to each other is considered the final output of the hierarchal type of clustering. We are glad that you liked our article. The primary use of a dendrogram is to work out the best way to allocate objects to clusters. In work undertaken towards tackling the shortcoming in published literature, Nsugbe et al. This approach starts with a single cluster containing all objects and then splits the cluster into two least similar clusters based on their characteristics. In the above example, even though the final accuracy is poor but clustering has given our model a significant boost from an accuracy of 0.45 to slightly above 0.53. In this technique, the order of the data has an impact on the final results. The number of cluster centroids B. We also use third-party cookies that help us analyze and understand how you use this website. (A). It goes through the various features of the data points and looks for the similarity between them. Beat ) I want to do this, please login or register down below 's the official instrumental ``., Great beat ) I want to do this, please login or register down below here 's the instrumental ( classic, Great beat ) I want to listen / buy beats very inspirational and motivational on a of! In this case, we attained a whole cluster of customers who are loyal but have low CSAT scores. In the early sections of this article, we were given various algorithms to perform the clustering. Is Pynecone A Full Stack Web Framework for Python? Assign all the points to the nearest cluster centroid. In fact, there are more than 100 clustering algorithms known. http://en.wikipedia.org/wiki/Hierarchical_clustering Learn about Clustering in machine learning, one of the most popular unsupervised classification techniques. 
In Unsupervised Learning, a machines task is to group unsorted information according to similarities, patterns, and differences without any prior data training. We hope you try to write much more quality articles like this. Now, we are training our dataset using Agglomerative Hierarchical Clustering. WebIn hierarchical clustering the number of output partitions is not just the horizontal cuts, but also the non horizontal cuts which decides the final clustering. very well explanation along with theoretical and code part, 2. Cluster Analysis (data segmentation) has a variety of goals that relate to grouping or segmenting a collection of objects (i.e., observations, individuals, cases, or data rows) into subsets or clusters, such that those within each cluster are more closely related to one another than objects assigned to different clusters. Problem with resistor for seven segment display. Broadly speaking, clustering can be divided into two subgroups: Since the task of clustering is subjective, the means that can be used for achieving this goal are plenty. The Billboard charts Paul Wall rapping on 4 and doing the hook on the Billboard charts tracks every cut ; beanz and kornbread beats on 4 and doing the hook on the other 4 4 doing % Downloadable and Royalty Free and Royalty Free to listen / buy beats this please! Similar to Complete Linkage and Average Linkage methods, the Centroid Linkage method is also biased towards globular clusters. document.getElementById( "ak_js_1" ).setAttribute( "value", ( new Date() ).getTime() ); How to Read and Write With CSV Files in Python:.. Futurist Ray Kurzweil Claims Humans Will Achieve Immortality by 2030, Understand Random Forest Algorithms With Examples (Updated 2023). Let us understand that. Learn more about Stack Overflow the company, and our products. Below is the comparison image, which shows all the linkage methods. Album from a legend & one of the best to ever bless the mic ( classic, Great ). 
Unsupervised learning is training a machine using information that is neither classified nor labeled, allowing the machine to act on that information without guidance.
But how is hierarchical clustering different from other techniques? Here's how we would summarize our findings in a dendrogram. In any hierarchical clustering algorithm, you have to keep recalculating the distances between data samples or subclusters, which increases the number of computations required. Complete Linkage is biased towards globular clusters. Ward's method is less susceptible to noise and outliers. Clustering algorithms have also proven effective at producing what market researchers call market segments.
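The computational cost mentioned above comes from re-evaluating cluster-to-cluster distances after every merge. The sketch below is a deliberately naive agglomerative clustering in plain NumPy, written for illustration rather than speed: at each of the n - 1 merges it scans every pair of current clusters, so the total work grows roughly as O(n³). `naive_agglomerative` is a hypothetical helper and the five points are made up; new clusters get the next integer id, similar in spirit to SciPy's linkage matrix, but the output here is just a list of `(a, b, height)` tuples. Passing `link=max` gives complete linkage and `link=min` gives single linkage.

```python
import numpy as np
from itertools import combinations

def naive_agglomerative(X, link=max):
    """Hypothetical helper: naive agglomerative clustering.

    link=max -> complete linkage (maximum point-to-point distance)
    link=min -> single linkage (minimum point-to-point distance)
    Returns a list of merges (cluster_a, cluster_b, merge_height).
    """
    # Start with every point in its own cluster
    clusters = {i: [i] for i in range(len(X))}
    # Proximity matrix of pairwise Euclidean point distances, computed once
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    merges = []
    next_id = len(X)
    while len(clusters) > 1:
        # Re-evaluate the linkage distance for every pair of current clusters
        best = None
        for a, b in combinations(clusters, 2):
            d = link(D[i, j] for i in clusters[a] for j in clusters[b])
            if best is None or d < best[2]:
                best = (a, b, d)
        a, b, d = best
        # Merge the closest pair into a new cluster and record the merge height
        clusters[next_id] = clusters.pop(a) + clusters.pop(b)
        merges.append((a, b, d))
        next_id += 1
    return merges

# Hypothetical 5 points in 2-D space
X = np.array([[1.0, 1.0], [1.5, 2.0], [5.0, 7.0], [3.0, 4.0], [4.5, 5.0]])
merges = naive_agglomerative(X, link=max)
for a, b, h in merges:
    print(f"merge {a} + {b} at height {h:.3f}")
```

The recorded heights (about 1.118, 1.803, 3.606 and 7.211 for these points) are exactly the y-values a dendrogram would draw; production libraries avoid the full rescan by updating the proximity matrix incrementally.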

The distance at which two clusters combine is referred to as the dendrogram distance. The vertical scale on the dendrogram represents this distance or dissimilarity, so the "height" on the y-axis gives the value of the linkage criterion at which two clusters were merged. The position of a label along the x-axis, however, carries little meaning.

Hierarchical clustering is also known as Hierarchical Clustering Analysis (HCA). Clustering is one of the most popular methods in data science: it is an unsupervised machine learning technique that enables us to find structures within our data without trying to obtain specific insight, and it aims at finding natural groupings based on the characteristics of the data. Hierarchical clustering does not require us to prespecify the number of clusters, and most hierarchical algorithms that have been used in information retrieval (IR) are deterministic.

Here we use Python to explain the hierarchical clustering model. We import all the necessary libraries and then load the data (let's assume there are N clusters). With the agglomerative approach, smaller clusters are created first, which may reveal similarities in the data. In the Complete Linkage technique, the distance between two clusters is defined as the maximum distance between an object (point) in one cluster and an object (point) in the other cluster. With Centroid Linkage, at each stage we combine the two sets that have the smallest centroid distance. The divisive algorithm runs the other way; it begins along these lines: assign all N of our points to one cluster. This updating happens iteratively until convergence, at which point the final exemplars are chosen, and hence the final clustering is given.

Though hierarchical clustering may be mathematically simple to understand, it is computationally a very heavy algorithm. If we don't know about these limitations, we may end up using these algorithms in cases they are not suited to.
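To see what the dendrogram's "height" means in practice, the sketch below builds a complete-linkage tree with SciPy for five hypothetical 2-D points, reads the merge heights from column 2 of the linkage matrix, and makes a horizontal cut with `fcluster(..., criterion="distance")`. The data and the cut height of 3.0 are illustrative assumptions. `dendrogram(..., no_plot=True)` returns the tree layout without drawing it, so matplotlib is not required to run this.

```python
import numpy as np
from scipy.cluster.hierarchy import linkage, fcluster, dendrogram

# Hypothetical 5 points in 2-D space
X = np.array([[1.0, 1.0], [1.5, 2.0], [5.0, 7.0], [3.0, 4.0], [4.5, 5.0]])

# Complete linkage: cluster distance = maximum pairwise point distance
Z = linkage(X, method="complete", metric="euclidean")

# Column 2 of the linkage matrix holds the merge heights (the dendrogram's y-axis)
heights = Z[:, 2]

# Horizontal cut at height t: points stay together only if they merged at or below t
labels = fcluster(Z, t=3.0, criterion="distance")

# Compute the dendrogram layout without plotting; "ivl" is the leaf order on the x-axis
tree = dendrogram(Z, no_plot=True)

print(heights)
print(labels)
print(tree["ivl"])
```

Only the first two merges happen below 3.0, so the cut should leave three flat clusters: points 0 and 1, points 3 and 4, and point 2 on its own. Because no number of clusters was fixed in advance, moving the cut up or down changes how many clusters come out.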